- AI

- core values

- exploration

-

Rails, Tailwindcss and Kamal on Apple Silicon

The default setup for tailwindcss-rails does not work on Apple Silicon. This post will show you how to fix it.

-

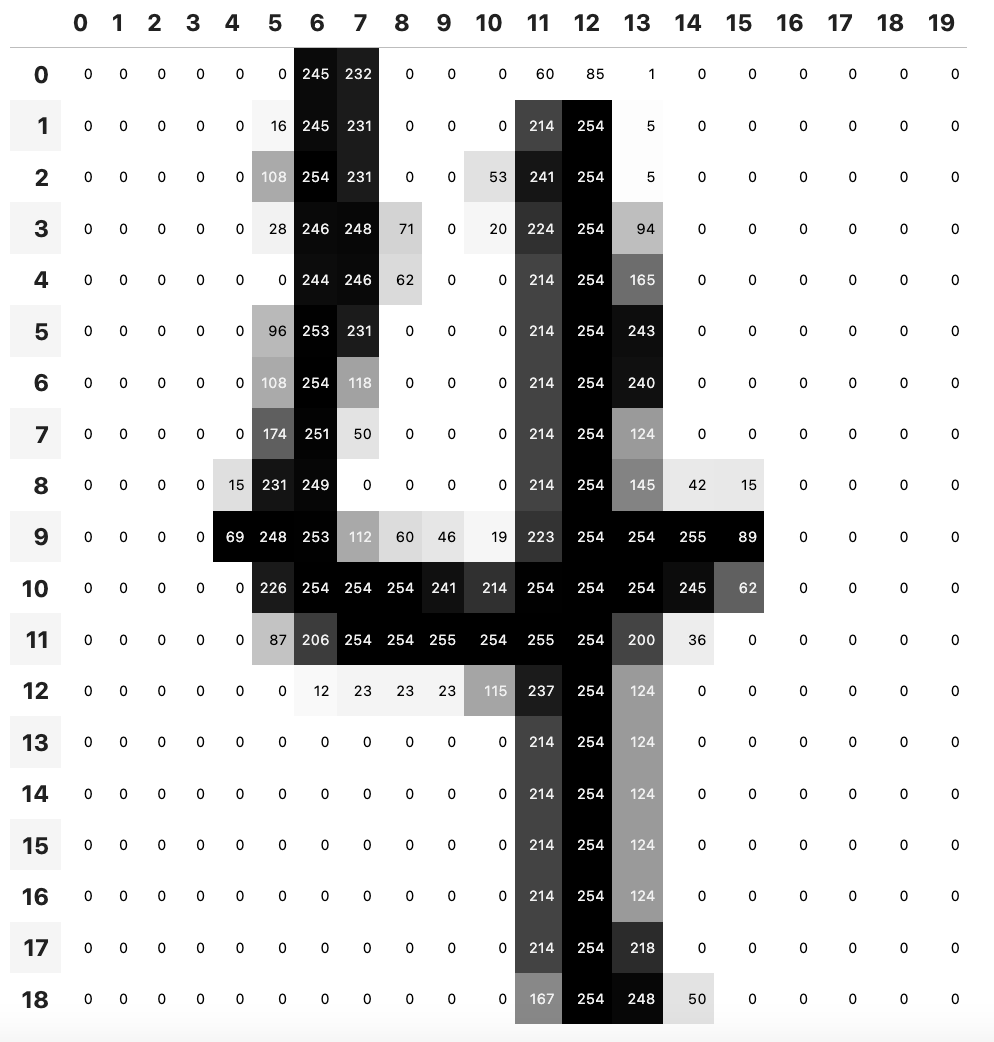

Stochastic Gradient Descent (SGD) with PyTorch and Fast.ai

Speeding up vanilla Gradient Descent with Stochastic Gradient Descent (SGD) so that it converges on a minimum much more quickly.

-

From approximating math functions to computer vision

Let's look at how neural networks let us make predictions beyond quadratic functions.

-

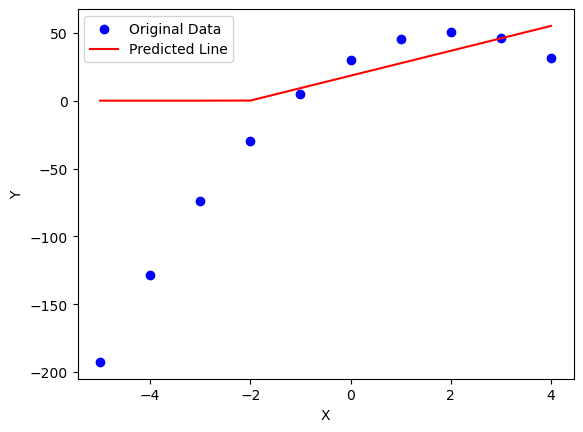

Estimate any function with Gradient Descent

Let's have a look at how we can estimate more general functions with Gradient Descent by combining linear and non-linear functions.

-

Gradient Descent from scratch

A deep dive into how Gradient Descent works, from the math behind it to a working Ruby sample.